Plug and play: Connect your data to an LLM with Needle

Since the introduction of the Model Context Protocol (MCP)*, I’ve been experimenting with different ways to connect company data to LLMs like GPT and Claude. My goal was to find a way to seamlessly link a wide variety of data sources (Google Drive, Notion, local storage, SQL) to an LLM.

And I think I’ve found a potential winner.

One of the most impressive platforms I’ve recently come across is Needle. While the UX and features aren’t perfect yet, the concept is spot on: a platform with standardized connectors to numerous data sources, all directly linked to one or more LLMs.

Challenge and solution

The challenge is that every data source has traditionally needed its own custom integration. MCP addresses this by connecting content repositories, business tools, and development environments through a single, unified protocol.
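Here is what "a single, unified protocol" means in practice. MCP messages are JSON-RPC 2.0 requests, so a client talks to every server using the same small set of methods, such as `tools/list` and `tools/call`. The sketch below only builds the request objects with Python's standard library. It skips the `initialize` handshake and the transport (stdio or HTTP) that a real client needs. The helper `mcp_request` and the tool name `search_documents` are hypothetical.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC 2.0 requests carry an id so responses can be matched to them.
_next_id = count(1)


def mcp_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object of the kind MCP clients send."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discover what a server offers, then invoke one of its tools.
# The method names follow the MCP spec; the tool itself is hypothetical.
list_tools = mcp_request("tools/list")
call_tool = mcp_request(
    "tools/call",
    {"name": "search_documents", "arguments": {"query": "Q3 revenue"}},
)

for message in (list_tools, call_tool):
    print(json.dumps(message))
```

Because every MCP server answers the same methods, swapping a Google Drive connector for a Notion one changes which server the client talks to, not the shape of the requests.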

Once a connector is available, non-technical users can easily link it to their own data. Needle's connector repository is a good example of what is already on offer.

Within minutes, you can connect an external data source to an LLM. Once the platform gains access via the connector, it indexes the files. Currently, there’s no real-time connection between the data source and the LLM, but for most use cases, that’s not an issue. Scheduled synchronizations ensure that new files are indexed regularly.
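The mechanism Needle uses for this isn't described here. The following is only a minimal sketch of the general idea behind scheduled indexing, assuming a local folder as the data source. On each run, every file is fingerprinted, and only new or changed files are returned for re-indexing. The functions `fingerprint` and `sync` are hypothetical names.

```python
import hashlib
import tempfile
from pathlib import Path


def fingerprint(path: Path) -> str:
    """SHA-256 of the file's bytes; a changed hash means changed content."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def sync(root: Path, index: dict) -> list:
    """Return files under `root` that are new or changed since the last run.

    `index` maps relative path -> fingerprint and is updated in place;
    files that disappeared from `root` are dropped from it.
    """
    current = {}
    changed = []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        key = path.relative_to(root).as_posix()
        current[key] = fingerprint(path)
        if index.get(key) != current[key]:
            changed.append(key)  # a real pipeline would (re)embed this file
    index.clear()
    index.update(current)
    return changed


# Simulate three scheduled runs against a temporary folder.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    index = {}
    (root / "notes.md").write_text("first draft")
    first_run = sync(root, index)   # ['notes.md']
    (root / "todo.md").write_text("ship it")
    second_run = sync(root, index)  # ['todo.md']
    (root / "notes.md").write_text("second draft")
    third_run = sync(root, index)   # ['notes.md']

print(first_run, second_run, third_run)
```

If a job like this runs on a schedule (for example from cron), the index stays reasonably close to the source without a live connection. That is the trade-off described above.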

Once data sources are connected, you can create a collection. This allows you to query only the data assigned to that collection.
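Conceptually, a collection acts as a filter applied before retrieval. Below is a minimal sketch that assumes each document carries a set of hypothetical collection tags. It uses naive word overlap as a stand-in for the vector search a real platform would use. The names `Document` and `search` are illustrative and are not Needle's API.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    text: str
    collections: set = field(default_factory=set)  # hypothetical collection tags


def search(docs, collection, query, top_k=3):
    """Return ids of the best-matching documents inside one collection only."""
    terms = set(query.lower().split())
    # Scope first: documents outside the collection are never scored.
    in_scope = [d for d in docs if collection in d.collections]
    scored = [(len(terms & set(d.text.lower().split())), d.doc_id) for d in in_scope]
    # Highest overlap first; ties broken alphabetically for stable output.
    ranked = sorted(scored, key=lambda s: (-s[0], s[1]))
    return [doc_id for score, doc_id in ranked if score > 0][:top_k]


docs = [
    Document("repo/README.md", "setup instructions for the api client", {"github"}),
    Document("repo/client.py", "def fetch api client with retries", {"github"}),
    Document("finance/q3.pdf", "q3 revenue and api spend", {"finance"}),
]

print(search(docs, "github", "api client"))   # ['repo/README.md', 'repo/client.py']
print(search(docs, "finance", "api client"))  # ['finance/q3.pdf']
```

Note that `finance/q3.pdf` mentions "api" but never appears in the `github` results. This is because scoping happens before ranking, not after it.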

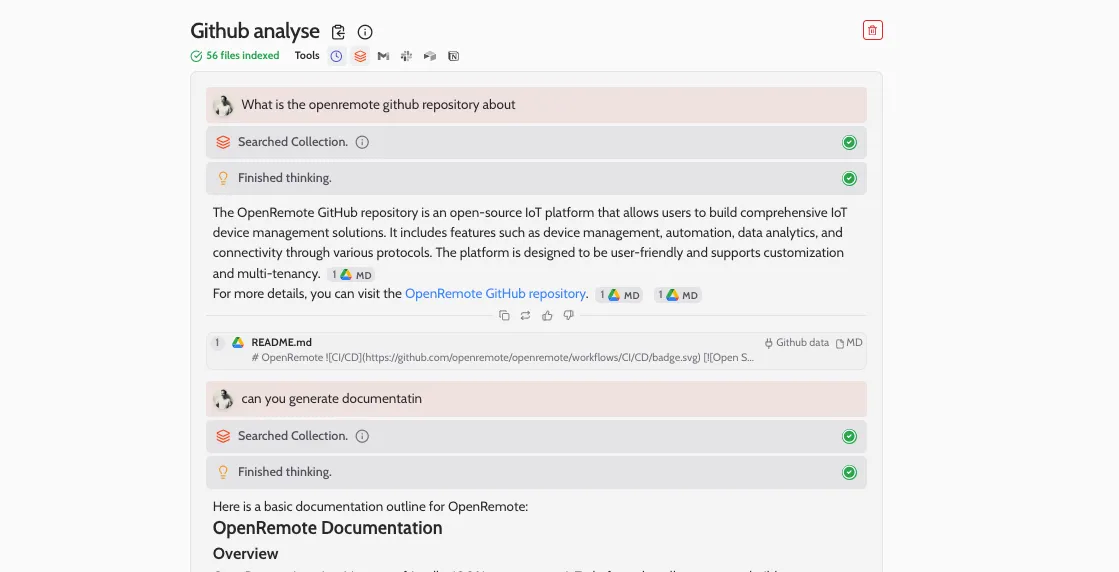

For example, I connected a GitHub repository to a collection, enabling me to ask questions about the code, functions, documentation, and more.

While the connected language model is a bit slow and not as powerful as GPT, the overall concept is solid.

A future with MCP

I see platforms like this becoming essential internal tools that let businesses unify their financial, CRM, sales, and operations systems. I expect that connectors will eventually exist for all major players, such as Salesforce, SAP, Exact, AFAS, Pipedrive, Notion, Microsoft, and Google.

A good analogy is the invention of Bluetooth. It allowed all kinds of wireless devices (mice, keyboards, speakers, computers, cars, phones) to connect through one shared protocol, regardless of make or model. Enterprise data systems need something similar.

A final thought: these integrations will inevitably raise security and privacy concerns. Security professionals are likely to have their hands full assessing and testing these tools, and they should, because many more are on the way.

*Model Context Protocol (MCP) is a standardized framework designed to integrate large language models (LLMs) with various data sources in a seamless and scalable way. Developed by Anthropic, MCP was created to solve the challenge of securely and efficiently connecting business tools, content repositories, and development environments to AI models. Instead of requiring custom-built integrations for each system, MCP provides a unified protocol that simplifies access to structured and unstructured data. This enables companies to leverage AI for deeper insights, automation, and decision-making without extensive manual setup.
